Hello folks, this is Utpal. In this article, I build a CI/CD pipeline on the AWS cloud.

AWS (Amazon Web Services), a subsidiary of Amazon, is a leading cloud service provider. It offers on-demand cloud computing platforms and APIs to individuals, companies, and governments on a metered, pay-as-you-go basis.

In a typical IT firm there are two teams: development and operations. The developers write the code and pass it to the operations team. The operations team deploys the code, runs tests (unit, integration, load, UI, security tests, etc.) and monitors it. In case of any failure or bug, they give feedback to the developers. After the fixes, when the code passes all the tests, it is uploaded to the target servers.

This setup causes several problems.

Code that runs on a developer's system may not run properly on the operations team's systems. When it doesn't, the operations team reports that the code is faulty, which the developers dispute. This back-and-forth between the two teams reduces efficiency, productivity and, ultimately, revenue.

The whole process is manual, and its steps are not connected in any automated way, so it takes a long time.

After the software is in production, it is hard to make changes when new requirements come in.

This is where DevOps culture comes in: it solves these problems and increases productivity and efficiency. It improves collaboration between the development and operations teams, because they participate together in the entire software development lifecycle, and it uses automation tools at each stage of the DevOps lifecycle.

First, sync-up/scrum/stand-up meetings are held to plan development work, resolve issues and challenges, and collaborate. Then the code is developed and committed to a private code repository such as AWS CodeCommit, Bitbucket or Azure Repos. Next, it is built into an executable form and tested to find faults and bugs using tools like Selenium. After the fixes, when the code passes all the tests, it is released either internally or externally. The code is then deployed to the target systems (test/production servers) using tools like Ansible, Chef or AWS CodeDeploy, and it is maintained and monitored continuously with monitoring tools (on AWS, CloudWatch). When further problems or new requirements come up, planning starts again. The lifecycle is automated as far as possible, and on the AWS cloud, CodePipeline is an excellent service for this.

Basically, a DevOps (CI/CD) pipeline has four stages: source, build, test and deploy.

In the source stage, code is pulled from the repository. Problems that can occur here include merge conflicts, tightly coupled changes and repository unavailability.

In the build stage, code is compiled and passed through unit tests, style checks, etc., and then build artifacts are generated. A build artifact is the packaged output of the build that later stages test and deploy (not to be confused with AWS CodeArtifact, which is a fully managed repository service to securely store, publish and share software packages). Problems here include inconsistent configuration and long waits for build server availability.

In the test stage, various tests such as integration, load, UI and security tests are run. Problems here include inconsistency, load-testing problems and simply going through the motions.

Finally, in the deploy stage, the code is deployed to a staging environment and then promoted to the production environment. Deployment downtime and inconsistent rollbacks sometimes occur here.

There are two key terms in DevOps: CI and CD.

In Continuous Integration (CI), whenever new code is checked into the repository, a new build and test run starts automatically in a consistent, repeatable environment, and artifacts are generated for deployment. In case of failure, feedback is sent continually.

In Continuous Deployment (CD), deployments to the staging and production environments happen automatically without impacting customers, so changes are delivered faster.
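To make the CI/CD flow concrete, here is a toy model in plain Python (not an AWS API): a commit passes through source, build, test and deploy in order, and the first failing stage stops the run and produces feedback for the developers. The stage functions and the rule that a commit containing "bug" fails its tests are hypothetical stand-ins.

```python
from dataclasses import dataclass, field
from typing import Callable, List, Optional, Tuple

# A stage receives the previous stage's output and returns its own output,
# or raises an exception to signal failure.
Stage = Callable[[object], object]


@dataclass
class RunResult:
    succeeded: bool = False
    completed_stages: List[str] = field(default_factory=list)
    feedback: Optional[str] = None


def run_pipeline(commit: str, stages: List[Tuple[str, Stage]]) -> RunResult:
    """Run the stages in order; the first failure stops the run with feedback."""
    result = RunResult()
    output: object = commit
    for name, stage in stages:
        try:
            output = stage(output)
        except Exception as exc:
            result.feedback = f"{name} failed for {commit}: {exc}"
            return result
        result.completed_stages.append(name)
    result.succeeded = True
    return result


def unit_tests(artifact: object) -> object:
    # Hypothetical check: treat any commit containing "bug" as failing.
    if "bug" in str(artifact):
        raise ValueError("unit test failed")
    return artifact


STAGES: List[Tuple[str, Stage]] = [
    ("source", lambda commit: f"src({commit})"),
    ("build", lambda src: f"artifact({src})"),
    ("test", unit_tests),
    ("deploy", lambda art: f"{art} deployed to staging, then production"),
]

if __name__ == "__main__":
    print(run_pipeline("commit-1", STAGES))
    print(run_pipeline("commit-bug", STAGES))
```

A real pipeline runs each stage on managed infrastructure instead of in one process, but the control flow (stop at the first failure, report it, otherwise promote the change) is the same idea.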

AWS CodeCommit is a secure, scalable, managed source control service for privately storing and managing code, binary files, documents, etc. It supports the standard functionality of Git.

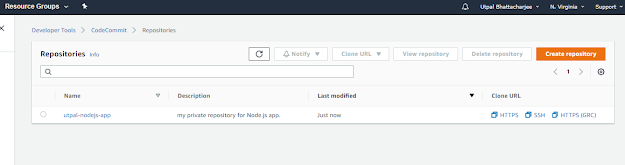

In this project, I first created a private code repository on AWS CodeCommit and then uploaded my files to it via Git:

```shell
git remote add origin https://git-codecommit.us-east-1.amazonaws.com/v1/repos/utpal-nodejs-app
```
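For reference, here is a small Python sketch of the full first push to an empty CodeCommit repository. It assumes Git credentials for CodeCommit (HTTPS Git credentials or the AWS CLI credential helper) and `user.name`/`user.email` are already configured; the commit message and the choice to run the commands through `subprocess` are illustrative, not the exact steps I ran.

```python
import subprocess
from typing import List


def codecommit_https_url(region: str, repo: str) -> str:
    """HTTPS clone URL of a CodeCommit repository (same form as the remote above)."""
    return f"https://git-codecommit.{region}.amazonaws.com/v1/repos/{repo}"


def first_push_commands(region: str, repo: str, branch: str = "master") -> List[List[str]]:
    """Git commands to push an existing local project to an empty repository."""
    return [
        ["git", "init"],
        ["git", "add", "."],
        ["git", "commit", "-m", "Initial commit"],
        ["git", "branch", "-M", branch],  # make sure the local branch is named `branch`
        ["git", "remote", "add", "origin", codecommit_https_url(region, repo)],
        ["git", "push", "-u", "origin", branch],
    ]


def run_all(commands: List[List[str]]) -> None:
    """Run the commands in the current directory, stopping at the first failure."""
    for cmd in commands:
        subprocess.run(cmd, check=True)


if __name__ == "__main__":
    # Only prints the commands; call run_all(...) inside the project folder to execute them.
    for cmd in first_push_commands("us-east-1", "utpal-nodejs-app"):
        print(" ".join(cmd))
```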

I also uploaded some files directly from the console. Note that the console uploads only one file at a time.

Here I worked only on the master branch, but multiple branches can be created, committed to and merged. Approval rule templates can also be added to require approvals for code changes/additions:
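An approval rule template is a small JSON document. The sketch below builds one that requires a given number of approvals for pull requests into a branch; the reviewer ARN and account ID in the example are hypothetical placeholders.

```python
import json
from typing import List


def approval_rule_template_content(branch: str, approvals: int, approver_arns: List[str]) -> str:
    """JSON content for a CodeCommit approval rule template on one branch."""
    if approvals < 1:
        raise ValueError("at least one approval is required")
    content = {
        "Version": "2018-11-08",
        "DestinationReferences": [f"refs/heads/{branch}"],
        "Statements": [
            {
                "Type": "Approvers",
                "NumberOfApprovalsNeeded": approvals,
                "ApprovalPoolMembers": approver_arns,
            }
        ],
    }
    return json.dumps(content, indent=2)


if __name__ == "__main__":
    # Hypothetical account ID and reviewer role.
    print(approval_rule_template_content(
        "master", 1, ["arn:aws:sts::111122223333:assumed-role/CodeCommitReview/*"]))
```

The string can be pasted into the console, or passed as `approvalRuleTemplateContent` to boto3's `create_approval_rule_template` and then linked to the repository with `associate_approval_rule_template_with_repository`.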

Be sure to upload all the required dependencies to the repository. The code can also be edited from the console:

Next, I created a build project on AWS CodeBuild to compile the source code, run tests and produce software packages that are ready to deploy.

AWS CodeBuild is a fully managed build service that compiles source code, runs unit tests, and produces artifacts that are ready to deploy. It eliminates the need to provision, manage, and scale build servers. We can also customize build environments in CodeBuild to use our own build tools.

To run a build, CodeBuild uses a buildspec file: a collection of build commands and related settings in YAML format. In this file, `version` tells CodeBuild how to interpret the file, and `phases` defines, step by step, what happens during each build phase. After starting a build, we can view the phase details in the console. Here is a sample file:

```yaml
version: 0.2

phases:
  install:
    runtime-versions:
      nodejs: 10
    commands:
      # commands to install external packages (wget/curl etc.)
      - .....................................................
  pre_build:
    commands:
      # commands for setup in the pre-build phase
      - .....................................................
  build:
    commands:
      # commands to build and test the application
      - .....................................................
      - .....................................................
  post_build:
    commands:
      # commands for the post-build phase, such as pushing the artifact somewhere else
      - .....................................................
```

I entered the project name and description; tags can also be assigned. Next comes the source, which can be CodeCommit, GitHub, S3, Bitbucket or GitHub Enterprise. I selected CodeCommit and then the appropriate repository.

Next comes the environment. CodeBuild runs builds in Docker images, which can be managed by CodeBuild or be our own custom image. I selected the Ubuntu operating system (Amazon Linux and Windows Server are also available), the Standard runtime and the standard:4.0 image, chose to always use the latest image for this runtime version, and set the environment type to Linux.

An IAM service role must be created or selected so that CodeBuild can work on the AWS cloud; without the proper role, an access-denied error occurs. Then the timeout and queued timeout can be adjusted. I didn't select any certificate.
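Under the hood, a service role starts with a trust policy that lets the service assume it. Here is a minimal sketch of that policy; note that it grants no permissions by itself, so the role still needs permission policies (for example for CloudWatch Logs, S3 and pulling from CodeCommit), which the console-created role gets automatically.

```python
import json


def service_trust_policy(service_principal: str) -> str:
    """Trust policy that lets the given AWS service assume an IAM role."""
    return json.dumps({
        "Version": "2012-10-17",
        "Statement": [
            {
                "Effect": "Allow",
                "Principal": {"Service": service_principal},
                "Action": "sts:AssumeRole",
            }
        ],
    }, indent=2)


if __name__ == "__main__":
    print(service_trust_policy("codebuild.amazonaws.com"))
```

With boto3, the string would go into `iam.create_role(RoleName=..., AssumeRolePolicyDocument=...)`. The same function with `"codepipeline.amazonaws.com"` produces the trust policy for a CodePipeline service role.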

Optionally, if the build needs to access resources in a VPC, the VPC must be selected; I didn't need this, so I didn't select one. We can also specify the compute size of the Docker container; I selected 3 GB memory and 2 vCPUs. We can also add environment variables and parameters, and choose KMS keys for encryption.

Next, I selected the buildspec file. By default, CodeBuild looks for a file named buildspec.yml in the root directory of the source. If the buildspec file uses a different name or location, its path from the source root must be entered. Build commands can also be inserted with the editor in the console. Optionally, an S3 bucket can be selected as the artifact location to store the build output. Caching can optionally be enabled to improve CodeBuild performance (the cache type can be local or Amazon S3). CodeBuild logs can be sent to a CloudWatch Logs log group or an S3 bucket.
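The console choices above map onto the arguments of CodeBuild's CreateProject API. The sketch below collects them as boto3 keyword arguments; the project name, role ARN and account ID are hypothetical, and the timeout values and local source cache are example settings rather than the ones I used.

```python
from typing import Any, Dict


def codebuild_project_request(name: str, repo_url: str, service_role_arn: str) -> Dict[str, Any]:
    """Keyword arguments for CodeBuild CreateProject mirroring the console choices."""
    return {
        "name": name,
        "description": "Build and test the Node.js app",
        "source": {
            "type": "CODECOMMIT",
            "location": repo_url,
            # Path relative to the source root; buildspec.yml at the root is the default.
            "buildspec": "buildspec.yml",
        },
        "artifacts": {"type": "NO_ARTIFACTS"},
        "environment": {
            "type": "LINUX_CONTAINER",
            "image": "aws/codebuild/standard:4.0",  # Ubuntu-based standard image
            "computeType": "BUILD_GENERAL1_SMALL",  # 3 GB memory, 2 vCPUs
        },
        "serviceRole": service_role_arn,
        "timeoutInMinutes": 60,
        "queuedTimeoutInMinutes": 480,
        "cache": {"type": "LOCAL", "modes": ["LOCAL_SOURCE_CACHE"]},
        "logsConfig": {"cloudWatchLogs": {"status": "ENABLED"}},
    }


if __name__ == "__main__":
    request = codebuild_project_request(
        "utpal-nodejs-build",  # hypothetical project name
        "https://git-codecommit.us-east-1.amazonaws.com/v1/repos/utpal-nodejs-app",
        "arn:aws:iam::111122223333:role/codebuild-utpal-service-role",  # hypothetical
    )
    print(request["environment"])
```

Passing the dictionary as `boto3.client("codebuild").create_project(**request)` would create the same kind of project from code, which is handy when the setup has to be repeated.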

The finished build project looks like this, and the artifacts can also be edited here:

Then I started a build, and it succeeded:

Here I can view the build logs and phase details, which help fix any issue in case of failure. At first the build failed because I had not uploaded the buildspec.yml file. After I uploaded the file to CodeCommit and retried the build, it succeeded. Note that CodeBuild detects build failures by exit codes, so we should ensure that the test execution returns a non-zero exit code on failure. To know more, see the AWS documentation.
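Here is a minimal sketch of a test script that follows this rule: it runs a few checks and exits with status 1 if any of them fails. The `add` function and the checks are hypothetical stand-ins for real application tests; in the buildspec it would be invoked with something like `python3 run_checks.py` (hypothetical file name). Standard test runners, such as the one behind `npm test`, normally already behave this way.

```python
import sys
from typing import Callable, Dict


def add(a: int, b: int) -> int:
    """Stand-in for the application code under test (hypothetical)."""
    return a + b


CHECKS: Dict[str, Callable[[], bool]] = {
    "adds small numbers": lambda: add(2, 3) == 5,
    "adds negative numbers": lambda: add(-2, -3) == -5,
}


def run_checks(checks: Dict[str, Callable[[], bool]]) -> int:
    """Run every check and return a process exit code: 0 if all pass, 1 otherwise."""
    failures = [name for name, check in checks.items() if not check()]
    for name in failures:
        print(f"FAILED: {name}", file=sys.stderr)
    print(f"{len(checks) - len(failures)}/{len(checks)} checks passed")
    return 1 if failures else 0


if __name__ == "__main__":
    # A non-zero exit status is how CodeBuild learns that the tests failed.
    sys.exit(run_checks(CHECKS))
```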

Next, I created a pipeline with AWS CodePipeline, a continuous delivery service used to model, visualize and automate software release steps. It orchestrates the entire CI/CD pipeline, automating the steps required to release software changes continuously. Load testing can be performed using third-party tools.

In CodePipeline, each stage can create artifacts, which are stored in S3 and passed to the next stage. For example, the source stage (e.g., CodeCommit) stores its output artifact in an S3 bucket, and this becomes the input artifact of the build stage (e.g., CodeBuild). The build stage then stores its own output artifact in S3, which becomes the input artifact of the deploy stage (e.g., CodeDeploy). To avoid creating multiple S3 buckets for artifacts, we should choose an S3 bucket using Custom location in the Advanced settings. These artifacts can be encrypted.

I entered the pipeline name and selected a new service role for IAM permissions (AWSCodePipelineServiceRole). Without the proper IAM permissions, access-denied errors occur; in that case, open the IAM management console and attach the required policy to the role.

In the advanced settings, the proper artifact store location (S3 bucket) must be selected; otherwise this error occurs (which I faced earlier): 'The action failed because either the artifact or the Amazon S3 bucket could not be found. Name of artifact bucket: codepipeline-us-east-1-*********. Verify that this bucket exists. If it exists, check the life cycle policy, then try releasing a change.'

Next, I chose AWS CodeCommit as the source provider (Amazon ECR, Amazon S3, Bitbucket and GitHub can also be chosen) and selected the repository and branch. There are two ways to detect changes in CodeCommit (the source). The recommended way is to use Amazon CloudWatch Events (an event rule is created automatically); the alternative is to let AWS CodePipeline check for changes periodically:
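The automatically created rule matches CodeCommit events for the chosen repository and branch. The sketch below builds an event pattern of that shape; the account ID is a placeholder, and the exact rule the console generates may differ in detail.

```python
import json


def codecommit_change_pattern(region: str, account_id: str, repo: str, branch: str) -> str:
    """Event pattern matching pushes to (or creation of) one branch of a CodeCommit repo."""
    pattern = {
        "source": ["aws.codecommit"],
        "detail-type": ["CodeCommit Repository State Change"],
        "resources": [f"arn:aws:codecommit:{region}:{account_id}:{repo}"],
        "detail": {
            "event": ["referenceCreated", "referenceUpdated"],
            "referenceType": ["branch"],
            "referenceName": [branch],
        },
    }
    return json.dumps(pattern, indent=2)


if __name__ == "__main__":
    # 111122223333 is a placeholder account ID.
    print(codecommit_change_pattern("us-east-1", "111122223333", "utpal-nodejs-app", "master"))
```

When a rule like this starts the pipeline, periodic polling is switched off in the source action (`PollForSourceChanges` set to `"false"`), so each push triggers exactly one run.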

Next, I chose CodeBuild as the build provider (Jenkins can also be chosen), specified the region and selected the CodeBuild project. The build stage can also be skipped. I selected a single build:

Here I used Elastic Beanstalk as the deploy provider. CloudFormation, CodeDeploy, AWS Service Catalog, Amazon ECS and Amazon S3 can also be chosen. The deploy stage can also be skipped:
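The wizard's result can also be written down as a pipeline structure. This sketch shows the three stages with their artifact wiring (the source output feeds the build, the build output feeds the deploy) and a single custom S3 artifact bucket. All names (pipeline, role ARN, bucket, build project, Elastic Beanstalk application and environment) are hypothetical.

```python
from typing import Any, Dict, Sequence


def _action(action_name: str, category: str, provider: str, config: Dict[str, str],
            inputs: Sequence[str] = (), outputs: Sequence[str] = ()) -> Dict[str, Any]:
    """One AWS-owned action; artifact names link the stages together."""
    return {
        "name": action_name,
        "actionTypeId": {"category": category, "owner": "AWS",
                         "provider": provider, "version": "1"},
        "configuration": config,
        "inputArtifacts": [{"name": a} for a in inputs],
        "outputArtifacts": [{"name": a} for a in outputs],
        "runOrder": 1,
    }


def pipeline_definition(name: str, role_arn: str, bucket: str, repo: str, branch: str,
                        build_project: str, eb_app: str, eb_env: str) -> Dict[str, Any]:
    """Source (CodeCommit) -> Build (CodeBuild) -> Deploy (Elastic Beanstalk)."""
    return {
        "name": name,
        "roleArn": role_arn,
        # One custom S3 bucket holds every stage's artifacts.
        "artifactStore": {"type": "S3", "location": bucket},
        "stages": [
            {"name": "Source", "actions": [_action(
                "Source", "Source", "CodeCommit",
                # "false" = no polling; a CloudWatch Events rule starts the pipeline.
                {"RepositoryName": repo, "BranchName": branch, "PollForSourceChanges": "false"},
                outputs=["SourceArtifact"])]},
            {"name": "Build", "actions": [_action(
                "Build", "Build", "CodeBuild", {"ProjectName": build_project},
                inputs=["SourceArtifact"], outputs=["BuildArtifact"])]},
            {"name": "Deploy", "actions": [_action(
                "Deploy", "Deploy", "ElasticBeanstalk",
                {"ApplicationName": eb_app, "EnvironmentName": eb_env},
                inputs=["BuildArtifact"])]},
        ],
    }
```

The dictionary is in the shape expected by `boto3.client("codepipeline").create_pipeline(pipeline=...)`; reading it top to bottom shows exactly how artifacts travel through the bucket from one stage to the next.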

Then I edited the pipeline and added a stage (existing stages can also be edited here). I clicked 'Add action group' to add a manual approval action and configured Amazon SNS to send me an email whenever a manual approval is needed. Finally, I clicked Done:
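The same edit can be expressed in code. This sketch inserts a manual approval stage in front of the Deploy stage of an existing pipeline structure; the SNS topic ARN, stage and action names, and the custom message are hypothetical, and the topic needs a confirmed email subscription for the notification to arrive.

```python
import copy
from typing import Any, Dict


def with_manual_approval(pipeline: Dict[str, Any], topic_arn: str,
                         before_stage: str = "Deploy") -> Dict[str, Any]:
    """Return a copy of the pipeline with a manual approval stage before `before_stage`."""
    approval_stage = {
        "name": "Approval",
        "actions": [{
            "name": "ManualApproval",
            "actionTypeId": {"category": "Approval", "owner": "AWS",
                             "provider": "Manual", "version": "1"},
            # SNS topic whose email subscribers are notified when approval is needed.
            "configuration": {
                "NotificationArn": topic_arn,
                "CustomData": "Please review the latest build before deployment.",
            },
            "runOrder": 1,
        }],
    }
    updated = copy.deepcopy(pipeline)  # leave the caller's dict untouched
    stage_names = [stage["name"] for stage in updated["stages"]]
    # list.index raises ValueError if `before_stage` does not exist.
    updated["stages"].insert(stage_names.index(before_stage), approval_stage)
    return updated
```

With boto3, the input would come from `get_pipeline(name=...)["pipeline"]` and the result would be saved with `update_pipeline(pipeline=...)`, which mirrors clicking Save in the console.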

If the build or deploy stage was skipped, it can also be added later from the Edit action menu (shown above). For multiple deployments (dev/test/prod), add the deployment providers by creating or editing a stage and adding action groups; a single stage can have multiple action groups. We can also add invoke, source and test stages. Here is an example of adding another build stage:
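In the pipeline structure, action groups show up as `runOrder` values: actions with the same `runOrder` run in parallel, and a higher `runOrder` runs after the lower ones finish. The sketch below builds one Deploy stage with an Elastic Beanstalk action per environment; the application and environment names are hypothetical.

```python
from typing import Any, Dict, List


def multi_env_deploy_stage(app: str, environments: List[str], parallel: bool = False,
                           artifact: str = "BuildArtifact") -> Dict[str, Any]:
    """One Deploy stage with an Elastic Beanstalk action per environment.

    Sequential (default): each action gets its own runOrder, like separate action groups.
    Parallel: all actions share runOrder 1, like actions in the same group.
    """
    actions = []
    for position, env in enumerate(environments, start=1):
        actions.append({
            "name": f"Deploy-{env}",
            "actionTypeId": {"category": "Deploy", "owner": "AWS",
                             "provider": "ElasticBeanstalk", "version": "1"},
            "configuration": {"ApplicationName": app, "EnvironmentName": env},
            "inputArtifacts": [{"name": artifact}],
            "runOrder": 1 if parallel else position,
        })
    return {"name": "Deploy", "actions": actions}
```

Sequential ordering is the usual choice for dev, then test, then prod, because a failure in an earlier environment stops the later deployments.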

After making all the required additions/changes, don't forget to click Save (at the top right corner).

Finally, the pipeline looks like this (note the Edit option at the top). Here we can also disable a transition between stages:

Now, to test the pipeline, I changed the color and added some more lines in my app. Then I committed the changes to the CodeCommit repository, and the pipeline started automatically:

I received an email asking me to approve the changes:

I clicked the link and approved them manually:
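Approvals can also be given from code. The pipeline state (as returned by CodePipeline's GetPipelineState) contains a token for the pending approval action, and that token is sent back with the decision. This sketch only builds the request; the pipeline, stage and action names are hypothetical and must match the ones in your pipeline.

```python
from typing import Any, Dict, Optional


def find_approval_token(state: Dict[str, Any], stage: str, action: str) -> Optional[str]:
    """Pull the approval token out of a GetPipelineState-shaped response."""
    for stage_state in state.get("stageStates", []):
        if stage_state.get("stageName") != stage:
            continue
        for action_state in stage_state.get("actionStates", []):
            if action_state.get("actionName") == action:
                return action_state.get("latestExecution", {}).get("token")
    return None


def approval_request(pipeline: str, stage: str, action: str, token: str,
                     approve: bool, summary: str) -> Dict[str, Any]:
    """Keyword arguments for PutApprovalResult."""
    return {
        "pipelineName": pipeline,
        "stageName": stage,
        "actionName": action,
        "result": {"summary": summary, "status": "Approved" if approve else "Rejected"},
        "token": token,
    }
```

With boto3, `state = client.get_pipeline_state(name="utpal-pipeline")` supplies the input, and `client.put_approval_result(**approval_request(...))` records the decision, just like clicking the link in the email.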

Then the pipeline succeeded:

The changes then appeared in the app like this:

Here is the review summary:

Here is the execution history:

Thanks for reading this post. If you have any feedback, please leave a comment.

You can also connect with me on LinkedIn.